Statistics Cross Validation

Cross-validation is a powerful technique used in statistics and machine learning to assess the performance and generalization capabilities of models. It plays a crucial role in model evaluation, helping practitioners make informed decisions about model selection, parameter tuning, and understanding the robustness of their models. This technique is particularly valuable when working with limited data, as it allows for a more accurate estimation of model performance without requiring a separate validation set.

Understanding Cross-Validation

Cross-validation involves dividing the available data into complementary subsets, or folds, and then using these folds to train and validate the model iteratively. The main idea is to use different subsets of data for training and validation in each iteration, ensuring that the model is evaluated on data it has not seen during training. This process helps to estimate the model’s performance and reduce the risk of overfitting, where the model performs well on the training data but fails to generalize to new, unseen data.

Types of Cross-Validation

Several types of cross-validation techniques exist, each with its own advantages and use cases. The choice of cross-validation method depends on factors such as the size of the dataset, the complexity of the model, and the specific evaluation goals.

Holdout Cross-Validation

In holdout cross-validation, the dataset is split into two subsets: a training set and a test set. The model is trained on the training set and evaluated on the test set. This method is simple and computationally efficient, making it suitable for large datasets. However, it may not provide a robust estimate of model performance if the test set is small or not representative of the overall data distribution.
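The holdout split described above can be sketched in a few lines of plain Python. This is only an illustration: the function name `holdout_split` and its parameters `test_fraction` and `seed` are assumptions made for this example, not part of any particular library.

```python
import random


def holdout_split(data, test_fraction=0.2, seed=0):
    """Shuffle a copy of `data` and split it into (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(data)
    # A seeded generator makes the split reproducible.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Example: hold out 30% of ten samples for testing.
train, test = holdout_split(range(10), test_fraction=0.3, seed=42)
```

Changing `seed` yields a different split, which shows why a single holdout estimate can vary noticeably on small datasets.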

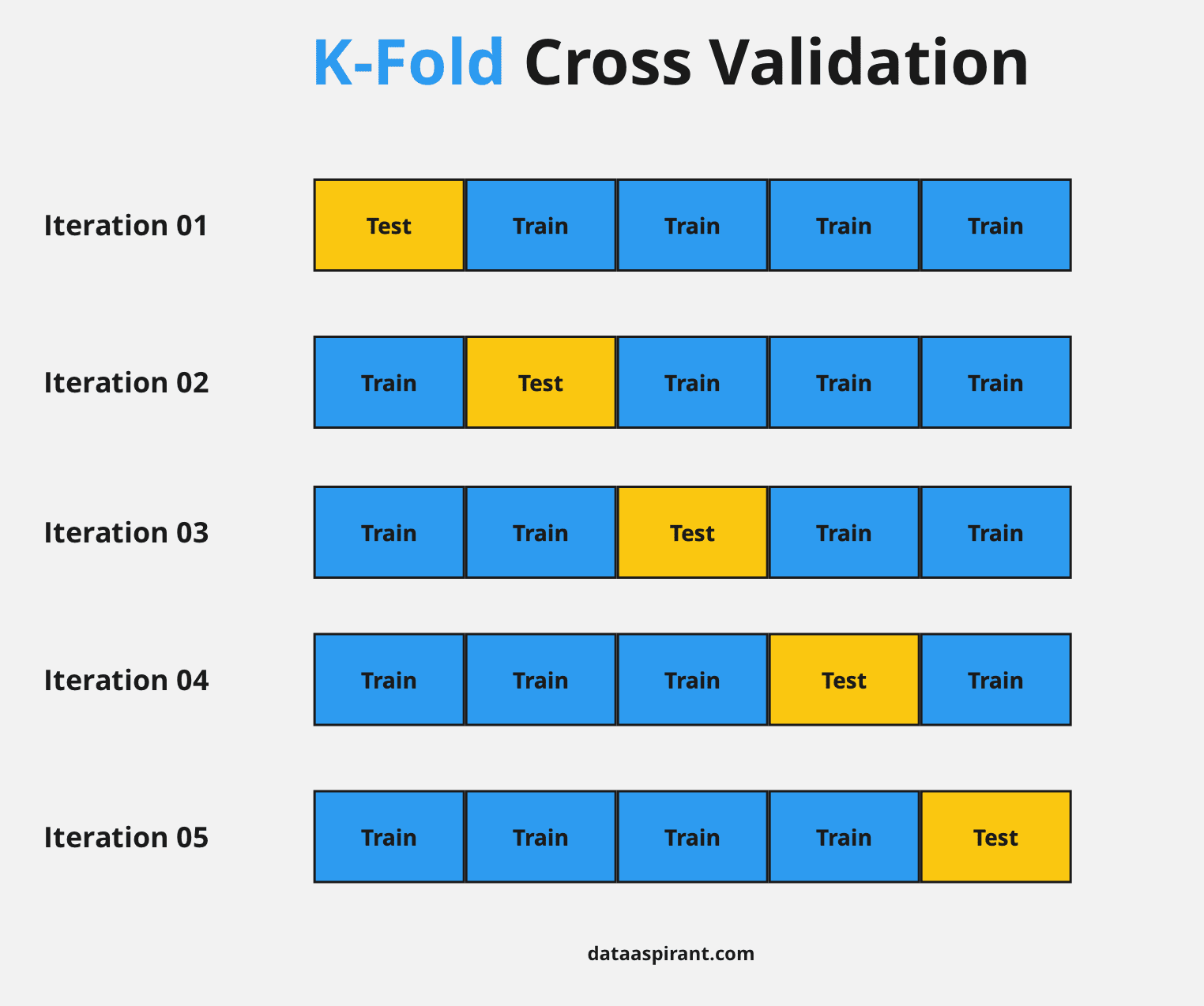

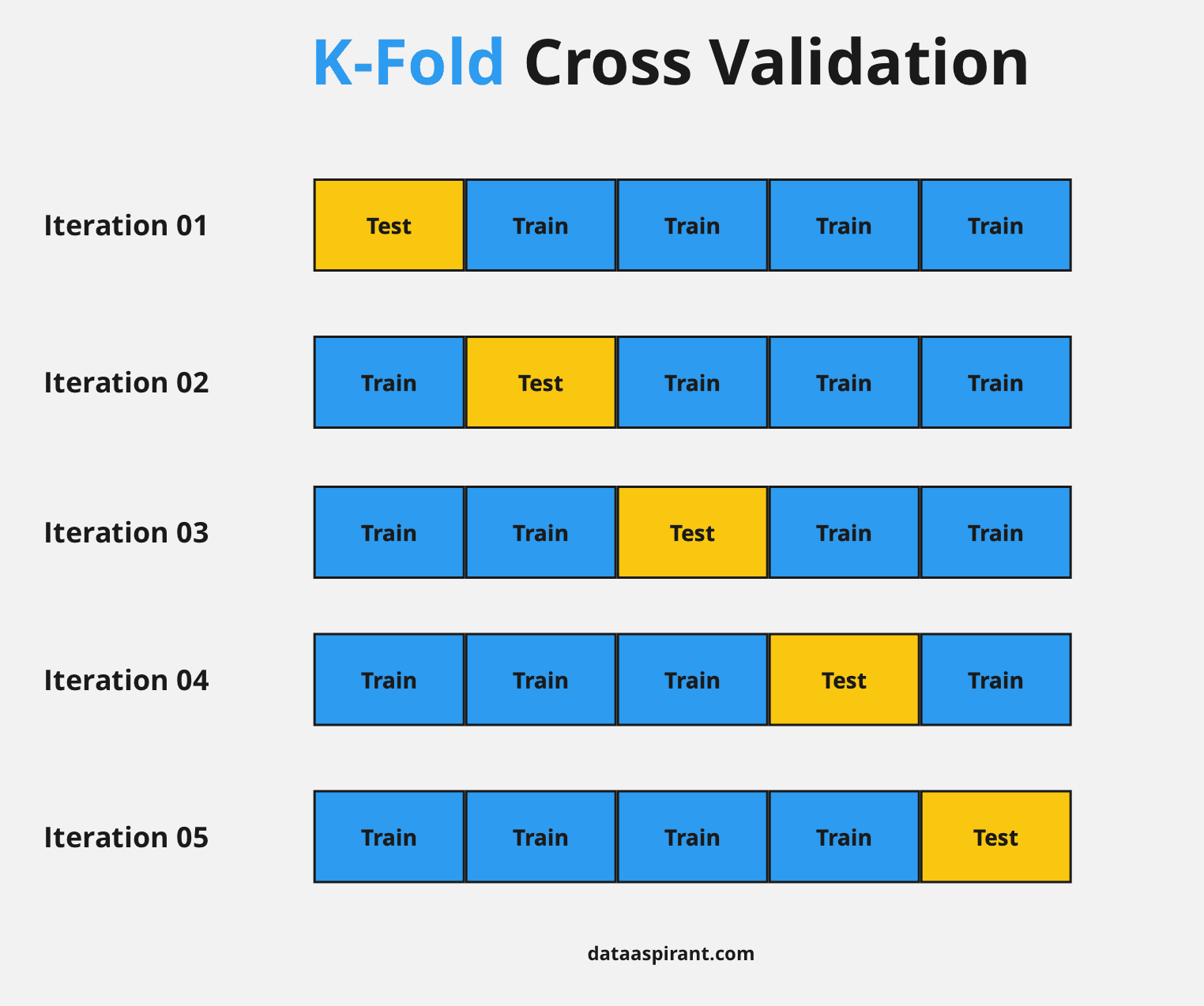

K-Fold Cross-Validation

K-fold cross-validation is a more robust approach that divides the dataset into k mutually exclusive subsets, or folds, of roughly equal size. The model is then trained and validated k times, with each fold serving as the validation set once and the remaining k - 1 folds as the training set. Because every data point is used for validation exactly once and for training k - 1 times, the estimate depends less on any single split than a holdout estimate does. The average performance across the k iterations is used as the final performance metric. Common choices are k = 5 or k = 10.
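As a minimal sketch of this procedure, the following plain-Python code builds the k train/validation index splits and averages a per-fold score. The helper names `k_fold_indices` and `cross_val_mse` are hypothetical, and the "model" is deliberately trivial (it predicts the mean of the training targets) so that the fold logic stays in focus.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Return k (train_idx, val_idx) pairs; each sample is validated once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i, val_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((train_idx, val_idx))
    return splits


def cross_val_mse(y, k, seed=0):
    """Average validation MSE of a mean-predictor baseline over k folds."""
    fold_scores = []
    for train_idx, val_idx in k_fold_indices(len(y), k, seed):
        # "Fit" on the training folds only: the model is just their mean.
        prediction = sum(y[j] for j in train_idx) / len(train_idx)
        fold_mse = sum((y[j] - prediction) ** 2 for j in val_idx) / len(val_idx)
        fold_scores.append(fold_mse)
    return sum(fold_scores) / k
```

In practice the mean predictor would be replaced by the model under evaluation; the splitting and averaging steps stay the same.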

Leave-One-Out Cross-Validation (LOOCV)

LOOCV is an extreme case of K-fold cross-validation where k is equal to the number of data points in the dataset. In each iteration, a single data point is used as the validation set, and the remaining data points form the training set. Because each training set contains almost all of the data, the resulting estimate has low bias, but it can have high variance, since the training sets overlap almost completely. The model must also be fitted once per data point, which makes the method computationally expensive for large datasets.
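A small sketch makes the cost of LOOCV concrete: the loop below runs once per data point. The function name `loocv_mse` is an assumption for this example, and the model is again a simple mean predictor, refitted on the n - 1 remaining points in every iteration.

```python
def loocv_mse(y):
    """Leave-one-out MSE of a mean-predictor baseline."""
    n = len(y)
    if n < 2:
        raise ValueError("LOOCV needs at least two data points")
    total = sum(y)
    squared_errors = []
    for i in range(n):
        # Refit on every point except i: the mean of the other n - 1 targets.
        prediction = (total - y[i]) / (n - 1)
        squared_errors.append((y[i] - prediction) ** 2)
    return sum(squared_errors) / n


# Three points: held-out errors are 1.5, 0.0 and 1.5, so the MSE is 1.5.
score = loocv_mse([1.0, 2.0, 3.0])
```

For a model that is expensive to train, the n refits in this loop are what dominate the running time.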

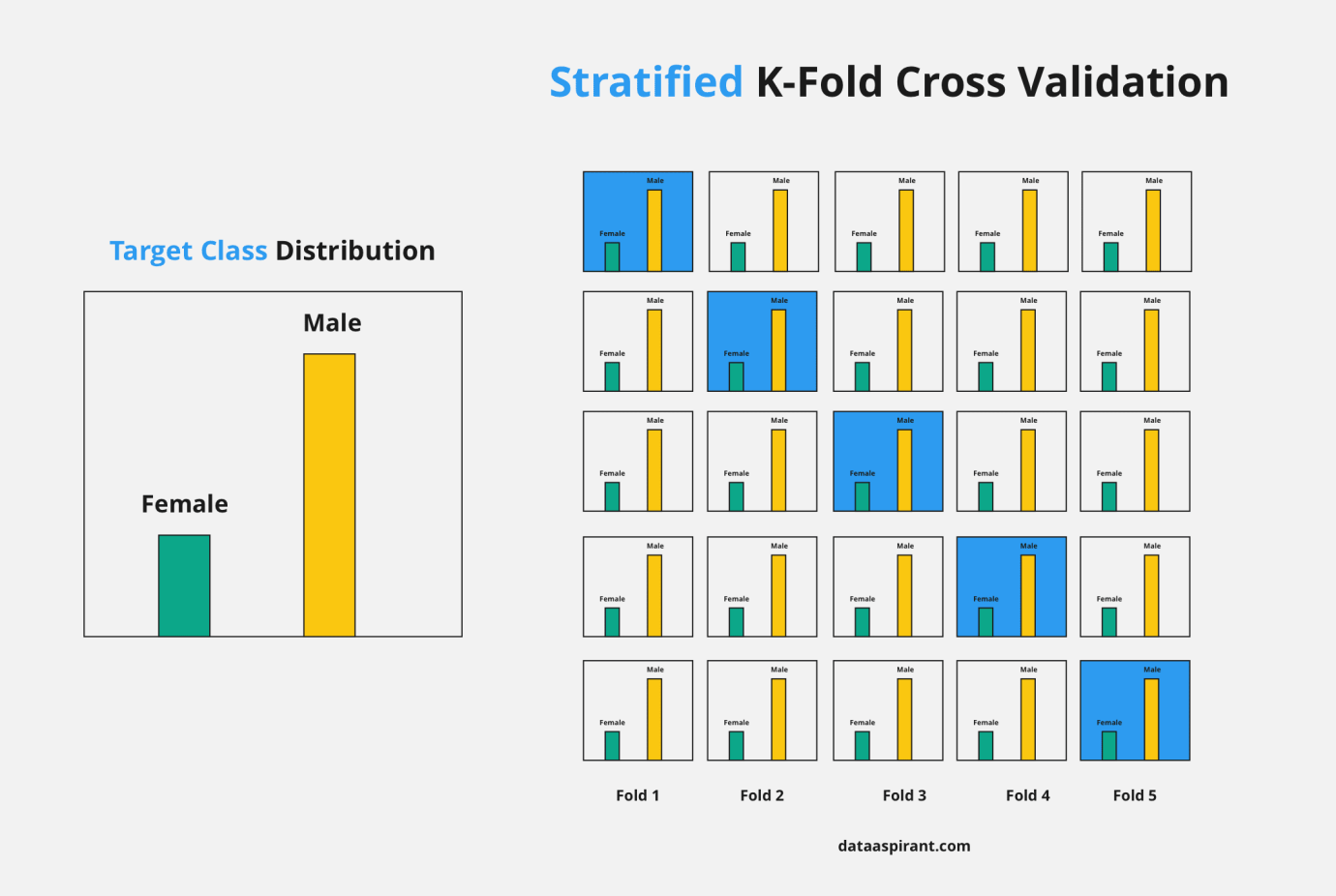

Stratified Cross-Validation

Stratified cross-validation is a variation of K-fold cross-validation that keeps the class distribution in each fold approximately the same as in the entire dataset. This technique is particularly useful when dealing with imbalanced datasets, where a purely random split could leave some folds with few or no examples of a minority class. By preserving the class proportions, stratified cross-validation provides a more reliable estimate of model performance, especially for minority classes.
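One simple way to build stratified folds, shown here as a sketch rather than a reference implementation, is to shuffle the indices of each class separately and deal them out across the folds in turn. The function name `stratified_k_fold` is hypothetical.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k, seed=0):
    """Return k folds of indices with roughly equal class proportions."""
    if k < 2:
        raise ValueError("k must be at least 2")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    offset = 0  # keeps overall fold sizes balanced across classes
    for members in by_class.values():
        rng.shuffle(members)
        # Deal this class's samples round-robin across the folds.
        for pos, idx in enumerate(members):
            folds[(offset + pos) % k].append(idx)
        offset += len(members)
    return folds


# Imbalanced toy labels: eight negatives and four positives, four folds.
labels = [0] * 8 + [1] * 4
folds = stratified_k_fold(labels, k=4)
```

Each fold in this toy example ends up with two negatives and one positive, mirroring the 2:1 ratio of the full dataset.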

Applications and Benefits

Cross-validation has wide-ranging applications across various fields, including but not limited to:

- Model Selection: Cross-validation helps compare the performance of different models, aiding in the selection of the most suitable model for a given task.

- Hyperparameter Tuning: It is used to optimize hyperparameters by evaluating the model's performance across different parameter configurations.

- Feature Selection: Cross-validation can assist in identifying the most informative features by evaluating the model's performance with different feature subsets.

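- Time Series Analysis: In time series data, cross-validation helps assess the model's ability to forecast future values based on historical data. Each split must train only on observations that precede the validation period, because randomly shuffled folds would let the model learn from the future.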

- Robustness Assessment: By evaluating the model's performance on different subsets of data, cross-validation provides insights into the model's robustness and its ability to generalize.
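For the time series case in the list above, folds are usually built so that the training data always comes before the validation data. The following sketch uses an expanding training window; the function name `expanding_window_splits` and its parameters are assumptions made for this example.

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Yield (train_idx, test_idx) pairs where training precedes testing."""
    first_test_start = n_samples - n_splits * test_size
    if first_test_start < 1:
        raise ValueError("not enough samples for the requested splits")
    for i in range(n_splits):
        start = first_test_start + i * test_size
        # Train on everything before `start`, test on the next block.
        yield list(range(start)), list(range(start, start + test_size))


# Ten time-ordered samples, three splits, two test points per split.
splits = list(expanding_window_splits(10, n_splits=3, test_size=2))
```

No shuffling is applied here, since shuffling would break the temporal order that the evaluation depends on.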

Performance Evaluation Metrics

The choice of performance evaluation metric depends on the specific problem and the nature of the data. Some commonly used metrics include:

| Metric | Description |
|---|---|
| Accuracy | Proportion of correctly classified instances. |
| Precision | Ratio of true positive predictions to the total number of positive predictions. |
| Recall (Sensitivity) | Ratio of true positive predictions to the total number of actual positive instances. |
| F1 Score | Harmonic mean of precision and recall, providing a balanced measure of model performance. |
| Mean Squared Error (MSE) | Average squared difference between predicted and actual values, commonly used for regression tasks. |
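The definitions in the table translate directly into code. The sketch below computes them from lists of true and predicted values; the function names and the convention of returning 0.0 when a denominator is zero are choices made for this example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Assumed convention: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def mean_squared_error(y_true, y_pred):
    """Average squared difference between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


metrics = classification_metrics([1, 1, 1, 0, 0, 0, 0, 0],
                                 [1, 1, 0, 1, 0, 0, 0, 0])
```

In a cross-validation run, a metric like these is computed on each validation fold and then averaged across folds.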

Challenges and Considerations

While cross-validation is a powerful tool, it comes with certain challenges and considerations:

- Computational Cost: Some cross-validation techniques, especially LOOCV, can be computationally expensive, particularly for large datasets.

- Data Leakage: Care must be taken to avoid data leakage, where information from the validation set inadvertently influences the training process.

- Model Complexity: Highly complex models may require more data for accurate cross-validation, as they are more prone to overfitting.

- Hyperparameter Tuning: Cross-validation can be time-consuming when used for hyperparameter tuning, especially with a large number of hyperparameters.
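A common source of the data leakage mentioned above is preprocessing that is fitted on the full dataset before splitting. As a sketch of the safer pattern, the code below computes standardization statistics from the training fold only and then applies them to both folds. The helper names `fit_scaler`, `transform`, and `scale_fold` are hypothetical.

```python
from statistics import mean, pstdev


def fit_scaler(values):
    """Learn the mean and standard deviation from training values only."""
    mu = mean(values)
    sigma = pstdev(values) or 1.0  # avoid division by zero for constant data
    return mu, sigma


def transform(values, scaler):
    """Standardize values using previously learned statistics."""
    mu, sigma = scaler
    return [(v - mu) / sigma for v in values]


def scale_fold(x, train_idx, val_idx):
    """Scale one fold without letting validation data influence the scaler."""
    train_values = [x[i] for i in train_idx]
    val_values = [x[i] for i in val_idx]
    scaler = fit_scaler(train_values)  # the validation fold is never used here
    return transform(train_values, scaler), transform(val_values, scaler)


# The outlier 100.0 sits in the validation fold and must not shift the scaler.
train_scaled, val_scaled = scale_fold([1.0, 2.0, 3.0, 100.0], [0, 1, 2], [3])
```

The same principle applies to any fitted preprocessing step, such as imputation or feature selection: fit it inside each training fold, then apply it to the validation fold.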

Best Practices and Tips

To ensure effective use of cross-validation, consider the following best practices:

- Randomization: Randomly shuffling the data before splitting it into folds helps ensure that the folds are representative of the entire dataset. The exception is time-ordered data, where the original order must be preserved.

- Multiple Runs: Repeating the cross-validation process multiple times with different random seeds can provide a more robust estimate of model performance.

- Avoiding Overfitting: Cross-validation does not prevent overfitting by itself; it exposes it. A large gap between training performance and cross-validated performance signals that the model is not generalizing to unseen data.

- Hyperparameter Optimization: Cross-validation is essential for hyperparameter tuning, as it allows for the selection of the best hyperparameters based on the model's performance across different folds.
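The "Multiple Runs" and "Hyperparameter Optimization" practices above can be combined, as in the following sketch. It picks the number of neighbours for a toy one-dimensional nearest-neighbour regressor by averaging k-fold error over several random seeds. All names here (`knn_predict`, `cv_mse`, `select_n_neighbors`) are hypothetical, and the model is chosen only because it is short to write.

```python
import random


def knn_predict(train, x, n_neighbors):
    """Average the targets of the n_neighbors training points nearest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:n_neighbors]
    return sum(target for _, target in nearest) / len(nearest)


def cv_mse(data, n_neighbors, k=5, seed=0):
    """k-fold cross-validated MSE for one hyperparameter value."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    fold_scores = []
    for i, val in enumerate(folds):
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        errors = [(y - knn_predict(train, x, n_neighbors)) ** 2 for x, y in val]
        fold_scores.append(sum(errors) / len(errors))
    return sum(fold_scores) / k


def select_n_neighbors(data, candidates, k=5, repeats=3):
    """Pick the candidate with the lowest CV error averaged over seeds."""
    def repeated_score(n):
        return sum(cv_mse(data, n, k, seed) for seed in range(repeats)) / repeats
    return min(candidates, key=repeated_score)


# Toy data on a straight line; compare three candidate settings.
data = [(float(x), 2.0 * x) for x in range(20)]
best = select_n_neighbors(data, candidates=[1, 3, 15])
```

Because the winning setting was chosen using these folds, its cross-validated score is an optimistic estimate; a separate test set or nested cross-validation gives a fairer final number.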

How does cross-validation help prevent overfitting?

Cross-validation helps detect and limit overfitting by providing an estimate of the model’s performance on unseen data. Because every evaluation uses data that was held out from training, a model that is overly specialized to the training data scores poorly, which steers model and hyperparameter choices toward ones that generalize to new, unseen instances.

What is the main advantage of K-fold cross-validation over holdout cross-validation?

K-fold cross-validation provides a more robust estimate of model performance by utilizing all data points for both training and validation. Every data point is used for validation exactly once, so the estimate does not depend on a single, possibly unrepresentative split, and averaging over k folds reduces the impact of data variability.

How can cross-validation be used for feature selection?

Cross-validation can be used for feature selection by evaluating the model’s performance with different subsets of features. By comparing the performance across different feature combinations, practitioners can identify the most informative features and optimize the model’s performance. To avoid data leakage, the selection itself should be repeated inside each training fold rather than performed once on the full dataset.